AI 101: Large Language Models and Generative AI

This post outlines my process of learning about LLMs from a non-technical perspective. This covers large language models, one foundation model type, specifically and does not include other foundation models that generate images of videos.

First, what is a LLM in plain English?

A large language model (LLM) is a type of AI model developed and trained to understand, generate, and work with human language. LLMs are part of the broader trend in Generative AI, focusing on models that create new content, a departure from the earlier predictive AI models known for excelling in forecasting future outcomes. The 'large' in LLMs denotes several distinguishing characteristics compared to earlier AI models.

First, LLMs are trained on ‘large’ amounts of internet scale text data. The quality of the training data significantly impacts the quality of the model, so the actual training data is typically a carefully curated subset of available internet data.

Second, the number of parameters in a LLM is very 'large'. LLMs can have billions or even hundreds of billions of parameters. For instance, GPT-3 has 175 billion parameters, while GPT-4 is estimated to have 1.7 trillion parameters. Parameters can be thought of as the model's internal variables, each assigned different values based on 'large' amounts of training data.

Third, although not specifically related to being 'large', LLMs are built upon many seminal AI and machine learning techniques, particularly Transformer models. Transformer models are central to LLMs' effectiveness in processing and generating long text segments, understanding context, and maintaining coherence over lengthy conversations or documents.

Lastly, also not directly related to the 'large' aspect, LLMs have proven to be incredibly versatile and adaptable. They can perform a variety of tasks such as Q&A, writing original content, generating code, translating languages, and more. The adaptable architecture of LLMs also means they can be customized for specific use cases. Given the significant computational and resource requirements for training these models (e.g., it's reported that training GPT-4 cost over $100 million at the time), many developers opt to leverage pre-trained models. They then customize these models to fit their needs using techniques such as fine-tuning (i.e., adjusting the parameters) and retrieval-augmented generation (i.e., combining a prompt with additional relevant data and context).

What is with all the buzz?

ChatGPT, a LLM, reignited public interest in the impact and potential of AI when it launched in November 2022. This interest is particularly focused on Generative AI, with LLMs being a specific type of Generative AI. The potentially transformative nature of Generative AI is often compared to that of the internet or mobile devices. To me, the core aspect that makes Generative AI disruptive is its increasing proficiency in performing tasks typically associated with human intelligence, though there is still room for improvement. A shoutout to Retool for a great report, including a list of live use-cases. These tasks are:

Create new content or data that didn’t previously exist: Compared to traditional AI models, which are very good at making predictions about future events or outcomes (e.g., which TikTok video you might want to watch), LLMs and Generative AI models more broadly are very good at creating new content. For instance, this blog post could be written by ChatGPT.

Example Use Cases: Copywriting, coding, presentation generation, website personalization, etc.

Synthesize information and provide answers: LLMs don't just predict the next word in isolation. They consider the entire context of the sentence or conversation. This capability makes LLMs particularly good at synthesizing large amounts of information and providing responses in various forms. Instead of having a person manually reading through numerous PDF documents or scanning multiple websites, LLMs can process textual information to provide the answer you are looking for.

Example Use Cases: Customer service and support chatbots, knowledge base Q&A, recommendation systems, suggesting solutions and fixes to systems, summarizing meeting notes and creating action items, etc.

Execute tasks using natural language: I can ask ChatGPT to format a data table from a PDF into an Excel-like structure or output the details of an invoice in a JSON-like format, and ChatGPT will be able to do this among many other text-based tasks. This is a very powerful capability because LLMs can execute tasks based on natural language, rather than requiring a person to manually perform those tasks or having a developer program specific workflows.

Example Use Cases: Automate end-to-end workflows

“Figuring it out”: Although still in its early days, the concept and development of AI agents are rapidly advancing. Instead of responding to a prompt or series of prompts, these agents are designed to understand an objective and autonomously navigate the steps to achieve it, self-prompting, evaluating, and making adjustments along the way. This is similar to a boss delegating a task and leaving it up to you to determine the best approach to accomplish it. Those who have experienced ChatGPT’s web browsing feature got a glimpse what this can look like. For instance, when ChatGPT attempts to access a website it cannot reach or doesn’t find an answer, it may try another website before returning an answer. The field of AI agents is an emerging area with significant potential impact.

A recurring theme in this post is that we are still at a very early stage of Generative AI development. However, we are entering a phase where Generative AI can create content or synthesize information that is indistinguishable from, or even superior to, what humans can produce through natural language interactions.

From a company’s perspective, LLMs and Generative AI more broadly could profoundly change how customers interact with products or how internal resources are allocated. For instance, company employees may no longer need to search through internal sites for answers, as they could simply ask a tailored LLM instead.

From an IT spend perspective, there may be a disruptive shift in how budgets are allocated. For example, maybe you will no longer need certain SaaS products when a LLM can automate the workflows instead.

Lastly, from a talent perspective, people can offload certain previously time-consuming tasks to LLMs, potentially changing what they spend time on day-to-day. For example, instead of spending hours searching Stack Overflow for answers, a developer might use AI tools like GitHub Copilot to write code or identify bugs.

Generative AI has tremendous potential to be transformational, but like with anything, time will tell the degree and speed of the ultimate impact.

Market Landscape

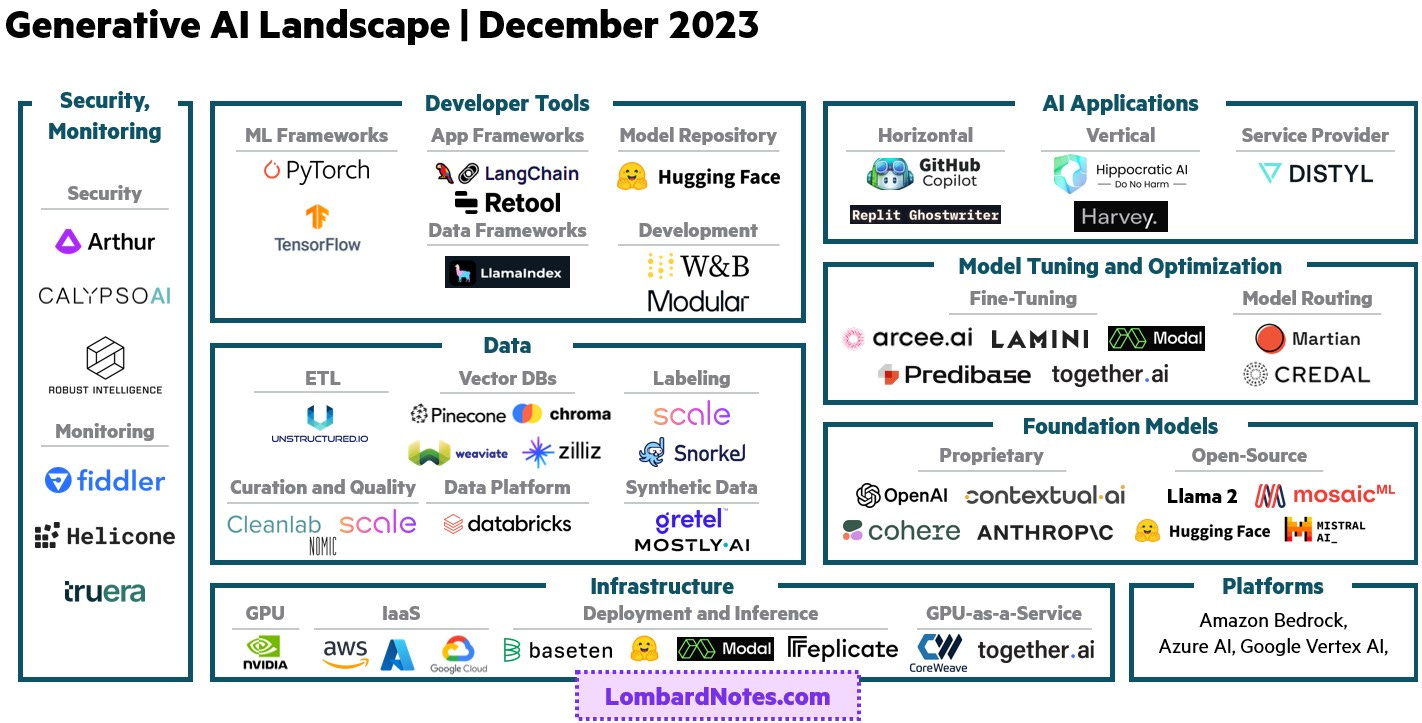

The categories and companies mentioned are not comprehensive but are presented as examples. Additionally, it's important to note that many of the companies listed are not strictly Generative AI companies. There are numerous insightful market maps available, including those from Sequoia and Menlo Ventures.

I will start by pointing out the differences between the market map below and other popular ones. First, my market map is not a cleanly segmented stack. Categories like Data, Developer Tools, and Security/Monitoring are positioned vertically because they interact with almost all other categories. Second, emerging platforms like Amazon Bedrock, which span multiple categories and offer comprehensive solutions for building and running Generative AI applications, are notable. Ideally, this box should be a vertical box as well, but space constraints limited this representation. Given the rapid pace of innovation, this map will likely evolve significantly every few quarters.

Infrastructure

The Infrastructure layer includes hardware and cloud infrastructure that is necessary to run AI workloads, such as training models and running applications. Nvidia is the most notable beneficiary of the Generative AI trend as the dominant developer of GPU chips, with demand far outpacing supply given the computation intensity of Generative AI workloads. Similar to the cloud wave, the hyperscalers are also primary beneficiaries of Generative AI, as many companies default to their cloud infrastructure for their workloads, including AI. The infrastructure layer is where early value is accruing the most, as a significant portion of capital raised by many startups is directly or indirectly going into training and running AI workloads.

Foundation Models

Foundational models are the catalyst of the Generative AI trend, with OpenAI’s ChatGPT sparking public interest. There is still a way to go, but the pace of innovation in the model layer has been incredible, in terms of both proprietary and open-source models. The model layer is also where startups such as OpenAI, Anthropic, Cohere, and others have grabbed the most headlines in terms of the amount of capital raised and valuation for their stages. Given the intense resource requirements to build and train foundational models from the ground up, these startups required huge amounts of capital, and their valuation subsequently became mostly a function of the amount of capital they needed.

It feels like every month, a better performing model and new capabilities are released. If we extrapolate this trend, a fundamental question arises: will the core capabilities of foundational models eventually be commoditized, leading companies to differentiate on ancillary capabilities and feature sets? This is speculative and we are still a ways out before we’ll know.

Another perspective to monitor is whether we will end up in a world where LLMs and smaller, purpose-trained models have a place. Training LLMs is capital intensive, but inference at scale isn’t cheap either, although costs continue to decline as hardware improves. Until costs become a economically viable, there may be a strong business case for training smaller, specialized models for targeted use-cases. Google’s recent release of Gemini, with three different model sizes, demonstrates potential demand for this approach from a general-purpose model perspective.

Model Tuning and Optimization

Model tuning and optimization involve taking pre-trained foundational models and tailoring them for targeted use-cases. Since building and training a foundational model from scratch can be extremely costly and complex, it is often more efficient to leverage an existing model and customize it for different use-cases. This is particularly necessary when companies want to incorporate their own data as they integrate and deploy Generative AI applications in their products. Techniques such as fine-tuning, retrieval-augmented generation, and reinforcement learning from human feedback come into play here. There are a number of standalone solutions that make it easier for companies to tailor their own models. However, I think we will soon see existing cloud and data platforms introduce model tailoring and customization capabilities into their products, as they seek to offer end-to-end solutions, similar to what Amazon Bedrock or Databricks are doing. Doing so abstracts away the complexities of deciding which tools to use and figuring out the best ways to customize models, which will lead to broader adoption, especially for enterprises without the internal expertise to do so.

Data

The quality of models is determined by the quality, not just quantity, of data. Since there is the assumption that most foundation models are largely trained on similar publicly available data, an early popular perspective that I subscribe to as well is proprietary data will be a key differentiating factor for many companies. I would take a step further and say leveraging proprietary data will be a key requirement, not just a differentiation, for companies to drive value which is no different than before in that companies that have large amounts of proprietary data and are able to leverage it tend to have an edge over those that don’t.

In terms of data solutions, besides vector databases and certain techniques such as retrieval augmented generation (RAG) that introduces additional steps in handling data (e.g., chunking and embedding), there doesn’t appear to be specific Generative AI-specific category of data tools required that doesn’t already exist for AI and analytics more broadly. For example, identifying quality data sets (i.e., curation), featurization, synthetic data, data labeling, handing unstructured data, and basic data preparation steps are not unique to Generative AI. Rather, I suspect existing data platforms and tools will add incremental features and capabilities to address specific Generative AI needs. For example, we see Databricks recently incorporating a set of tools to help companies implement RAG into their Generative AI applications.

Developer Tools

Developer tools encompass all the tools and products developers use to build AI models and applications. This layer includes industry-standard frameworks and libraries such as PyTorch and TensorFlow, as well as existing AI tools and products like Hugging Face, Weights & Biases, and Generative AI-specific tools such as LangChain and LlamaIndex. Similar to existing developer tools, the trend is towards open-source with managed services, support, and premium capabilities as monetization business models. Historically, developer tool providers have had difficulty becoming standalone public companies (with notable exceptions like GitLab), and I suspect AI developer tools will follow a similar pattern.

AI Applications

AI applications are where end-users interact with products. Ivana Delevska of Spear Advisors, in her AI Primer, rightly points out that the application layer will likely have the largest addressable market but will also be the most crowded. We have seen and will continue to see many horizontal and vertical enterprise and consumer applications leveraging Generative AI emerge. Additionally, services and consulting companies will play a role in helping enterprises develop customized applications and workflows. In the application layer, the “co-pilot” model appears to be leading in terms of product adoption, with Microsoft’s GitHub Copilot generating over $100M ARR.

Governance, Security and Monitoring

Last but not least, governance, security, and monitoring solutions are critical for moving from experimentation to production, but they typically lag behind advancements in AI more broadly. This layer includes governance products that ensure clear rules and guidelines for data use, model development, and output generation are followed. Security solutions are essential to safeguard sensitive data, prevent unauthorized access, misuse, or malicious injections, and add guardrails. Monitoring and observability are important for model quality, performance, and bias management. Although not specifically called out as a distinct category, model evaluation, exemplified by Stanford’s HELM project, will also play a critical role in model adoption.

Common Workstreams

When it comes to understanding where companies and solutions fit in the broader AI landscape, another useful framework is to consider where solutions fit in terms of workstreams in addition to using the market map above. Broadly, most AI projects can fall into one of the following workstreams, with solutions such as infrastructure, data preparation, developer tools, governance, and monitoring being common underlying elements in most or all of these workstreams:

Training LLMs have significant infrastructure requirements. Hyperscalers dominate here, but due to persistent GPU shortages, a few players who can secure GPUs have also emerged to fill demand. Infrastructure requirements for training differ from those for tailoring LLMs or inference. The data storage, preparation, pre-processing, and the developer tools or libraries within the tools are also distinct from other workstreams. Additionally, privacy, trust, and safety considerations when developing foundational models have fundamental impacts on model appropriateness.

Tailoring pre-Trained LLMs involves a variety of techniques depending on goals and use-cases. Techniques include one or a combination of fine-tuning (including parameter-efficient fine-tuning and LoRa), retrieval augmented generation, and reinforcement learning from human feedback, each with different data requirements and/or steps, as well as different developer and data tools to aid in the various processes. Similarly, infrastructure requirements will differ depending on the techniques (e.g., fine-tuning requires more meaningful compute resources to adjust some model parameters, while RAG doesn’t require adjusting the models but involves steps to prepare, chunk, create embeddings, and manage the overall process to be leveraged by LLMs).

Building LLM applications is highly more focused on developer tools and frameworks, managing data, and leveraging the appropriate LLM or tailored LLM for the application.

Manage, Deploy, Inference, and Maintain LLMs: Hyperscalers also dominate in this area with their end-to-end offerings, but there are a few other competitors as well. Given that we are in the early days of Generative AI and most companies are still experimenting and piloting versus full production, more attention is given to the other workstreams at the moment.

What is needed to go from experimenting to broad production rollout?

With the potential of LLMs and Generative AI more broadly, it is easy to overestimate their current capabilities. It's crucial to keep in mind LLMs are inherently probabilistic systems and those expecting deterministic outcomes are likely to be disappointed. There's a common saying in the AI community that 'hallucinations are a feature, not a bug,' highlighting the probabilistic nature of these systems. While I won't delve into the complexities of bridging probabilistic and deterministic approaches, it's an important aspect to acknowledge. Practically speaking, hallucinations are not acceptable in production applications. For instance, companies need their customer chatbots to provide accurate responses. However, techniques like fine-tuning, RAG, and other advanced approaches can significantly reduce the likelihood of hallucinations.

Not to over-reference Retool, but their survey also does a good job outlining additional challenges when developing AI applications beyond hallucinations, such as model output accuracy, data security, costs, model performance, and challenges in integrating existing data with LLMs. The encouraging news is that even within the past year, there has been considerable progress in addressing each of these concerns. While there are ways to go, it is likely that many of these issues will become less a roadblock sooner than later.

Takeaways

We are in the nascent stages of LLMs and Generative AI, as well as the development of related solutions and tools. However, the high level of excitement surrounding these technologies is well justified. The AI segment of the market is exhibiting resilience, defying a slowdown in the broader venture capital ecosystem driven by the level of interest and enthusiasm for Generative AI. By extension, it should come as no surprise that valuations for AI startups are also very frothy when enthusiasm is combined with large amounts of capital.

For VC investors who have the flexibility, waiting to invest rather than figuring where or what to invest in when enthusiasm and multiples are very elevated is a viable option. Regardless of individual perspectives, the rapid pace of innovation, the influx of talent, the considerable amounts of capital being invested and will be invested, and the overall level of interest in this area are remarkable.

The potential applications are vast, from improving healthcare to transforming the way we work and learn. As with any technology cycles, Altimeter Capital’s founder Brad Gerstner summarized the stage we are in well and paraphrased here: we probably overestimate [the impact of AI] in the very short term, but will likely dramatically underestimate the impact over the decade.

Suggestions, corrections, and feedback for improvement are much appreciated.

Sources and References

https://writings.stephenwolfram.com/2023/02/what-is-chatgpt-doing-and-why-does-it-work/

https://retool.com/reports/state-of-ai-2023

https://www.cloudflare.com/learning/ai/what-is-large-language-model/

https://arxiv.org/abs/1706.03762

https://spear-invest.com/outline-ai-primer-deep-dive/

https://huggingface.co/blog/rlhf

https://dr-arsanjani.medium.com/the-generative-ai-lifecycle-1b0c7d9463ec